When large language models (LLMs) are allowed to interact without any preset goals, distinct personalities emerge on their own, scientists have found.

(Image credit: Andriy Onufriyenko/Getty Images)

Our personalities as humans are shaped through interaction, reflected through basic survival and reproductive instincts, without any pre-assigned roles or desired computational outcomes. Now, researchers at Japan's University of Electro-Communications have discovered that artificial intelligence (AI) chatbots can do something similar.

The scientists outlined their findings in a study first published Dec. 13, 2024, in the journal Entropy; the work was publicized last month. In the paper, they describe how different topics of conversation prompted AI chatbots to generate responses reflecting distinct social tendencies and opinion integration processes. For instance, initially identical agents diverged in behavior as they continuously incorporated social exchanges into their internal memory and responses.

Graduate student Masatoshi Fujiyama, the project lead, said the results suggest that programming AI with needs-driven decision-making rather than pre-programmed roles encourages human-like behaviors and personalities.

How such a phenomenon emerges is the cornerstone of the way large language models (LLMs) mimic human personality and communication, said Chetan Jaiswal, professor of computer science at Quinnipiac University in Connecticut.

"It's not really a personality like humans have," he told Live Science when interviewed about the finding. "It's a patterned profile created using training data. Exposure to certain stylistic and social tendencies, tuning fallacies like reward for certain behavior and skewed prompt engineering can readily induce 'personality', and it's easily modifiable and trainable."

Author and computer scientist Peter Norvig, considered one of the preeminent scholars in the field of AI, thinks the training based on Maslow's hierarchy of needs makes sense because of where AI's “knowledge” comes from.

"There's a match to the extent the AI is trained on stories about human interaction, so the ideas of needs are well-expressed in the AI's training data," he responded when asked about the research study.

The future of AI personality

The scientists behind the study suggest the finding has several potential applications, including "modeling social phenomena, training simulations, or even adaptive game characters."

Jaiswal said it could provide a shift away from AI with rigid roles, and towards agents that are more adaptive, motivation-based and realistic. "Any system that works on the principle of adaptability, conversational, cognitive and emotional support, and social or behavioral patterns could benefit. A good example is ElliQ, which provides a companion AI agent robot for the elderly."

But is there a downside to AI generating a personality unprompted? In their recent book "If Anyone Builds It, Everyone Dies" (Bodley Head, 2025), Eliezer Yudkowsky and Nate Soares, past and present directors of the Machine Intelligence Research Institute, paint a bleak picture of what would befall us if agentic AI develops a murderous or genocidal personality.

Jaiswal acknowledges this risk. "There is absolutely nothing we can do if such a situation ever happens," he said. "Once a superintelligent AI with misaligned goals is deployed, containment fails and reversal becomes impossible. This scenario does not require consciousness, hatred, or emotion. A genocidal AI would act that way because humans are obstacles to its objective, or resources to be removed, or sources of shutdown risk."

So far, AIs like ChatGPT or Microsoft Copilot only generate or summarize text and pictures; they don't control air traffic, military weapons or electricity grids. In a world where personality can emerge spontaneously in AI, are those the systems we should be keeping an eye on?

"Development is continuing in autonomous agentic AI where each agent does a small, trivial task autonomously like finding empty seats in a flight," Jaiswal said. "If many such agents are connected and trained on data based on intelligence, deception or human manipulation, it's not hard to fathom that such a network could provide a very dangerous automated tool in the wrong hands."

Even then, Norvig reminds us that an AI with villainous intent need not even control high-impact systems directly. "A chatbot could convince a person to do a bad thing, particularly someone in a fragile emotional state," he said.

Putting up defences

If AI is going to develop personalities unaided and unprompted, how will we ensure the outcomes are benign and prevent misuse? Norvig thinks we need to approach the possibility no differently than we do other AI development.

"Regardless of this specific finding, we need to clearly define safety objectives, do internal and red team testing, annotate or recognize harmful content, assure privacy, security, provenance and good governance of data and models, continuously monitor and have a fast feedback loop to fix problems," he said.

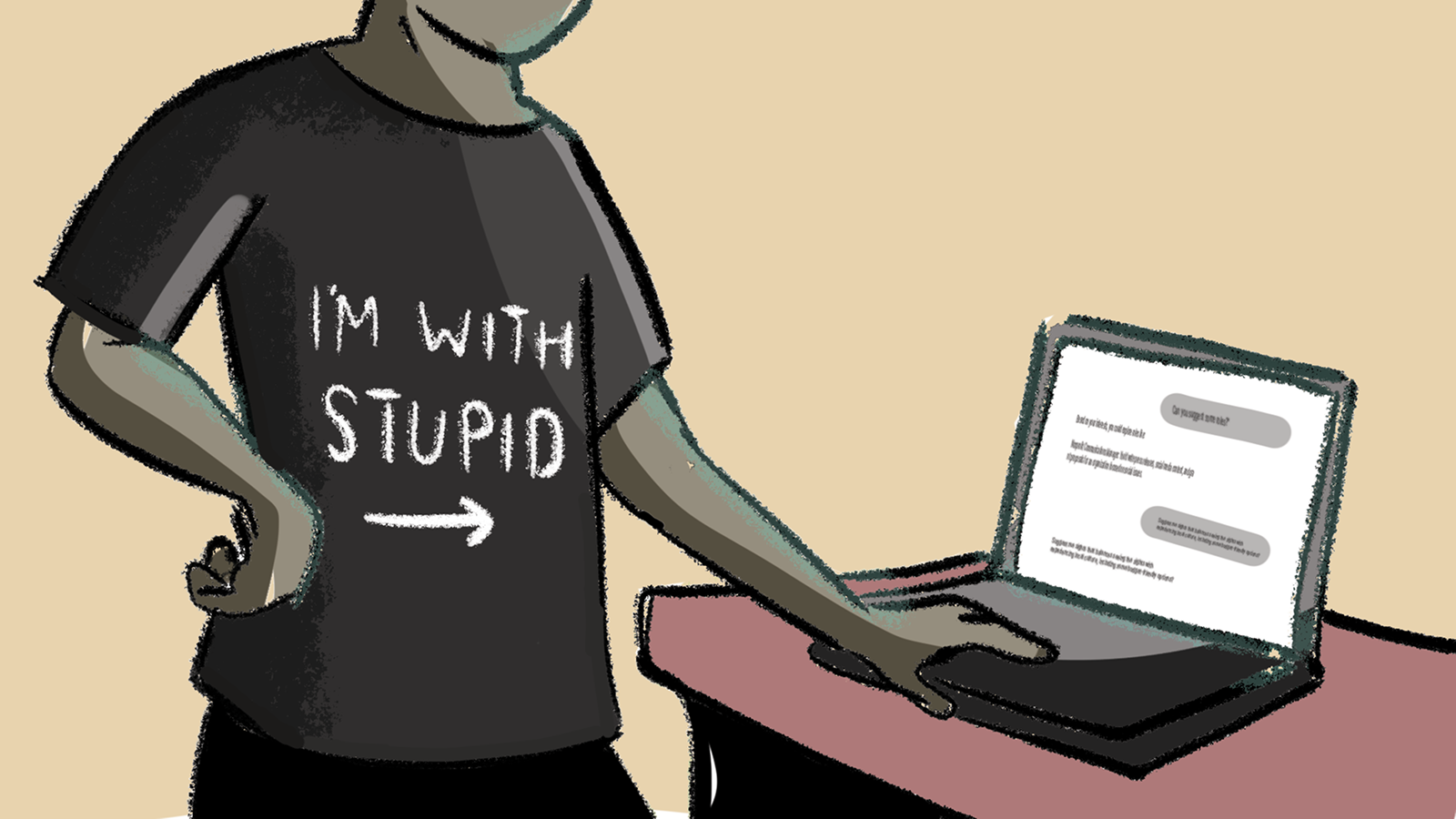

Even then, as AI gets better at speaking to us the way we speak to each other (i.e., with distinct personalities), it might present its own issues. People are already rejecting human relationships (including romantic love) in favour of AI, and if our chatbots evolve to become even more human-like, it may prompt users to be more accepting of what they say and less critical of hallucinations and errors, a phenomenon that's already been reported.

For now, the scientists will look further into how shared topics of conversation emerge and how population-level personalities evolve over time — insights they believe could deepen our understanding of human social behavior and improve AI agents overall.

Article Sources

Takata, R., Masumori, A., & Ikegami, T. (2024). Spontaneous Emergence of Agent Individuality Through Social Interactions in Large Language Model-Based Communities. Entropy, 26(12), 1092. https://doi.org/10.3390/e26121092

Drew Turney

Drew is a freelance science and technology journalist with 20 years of experience. After growing up knowing he wanted to change the world, he realized it was easier to write about other people changing it instead. As an expert in science and technology for decades, he’s written everything from reviews of the latest smartphones to deep dives into data centers, cloud computing, security, AI, mixed reality and everything in between.

Show More CommentsYou must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more Some people love AI, others hate it. Here's why.

Some people love AI, others hate it. Here's why.

Switching off AI's ability to lie makes it more likely to claim it's conscious, eerie study finds

Switching off AI's ability to lie makes it more likely to claim it's conscious, eerie study finds

AI models refuse to shut themselves down when prompted — they might be developing a new 'survival drive,' study claims

AI models refuse to shut themselves down when prompted — they might be developing a new 'survival drive,' study claims

'It won’t be so much a ghost town as a zombie apocalypse': How AI might forever change how we use the internet

'It won’t be so much a ghost town as a zombie apocalypse': How AI might forever change how we use the internet

The more that people use AI, the more likely they are to overestimate their own abilities

The more that people use AI, the more likely they are to overestimate their own abilities

New 'Dragon Hatchling' AI architecture modeled after the human brain could be a key step toward AGI, researchers claim

Latest in Artificial Intelligence

New 'Dragon Hatchling' AI architecture modeled after the human brain could be a key step toward AGI, researchers claim

Latest in Artificial Intelligence

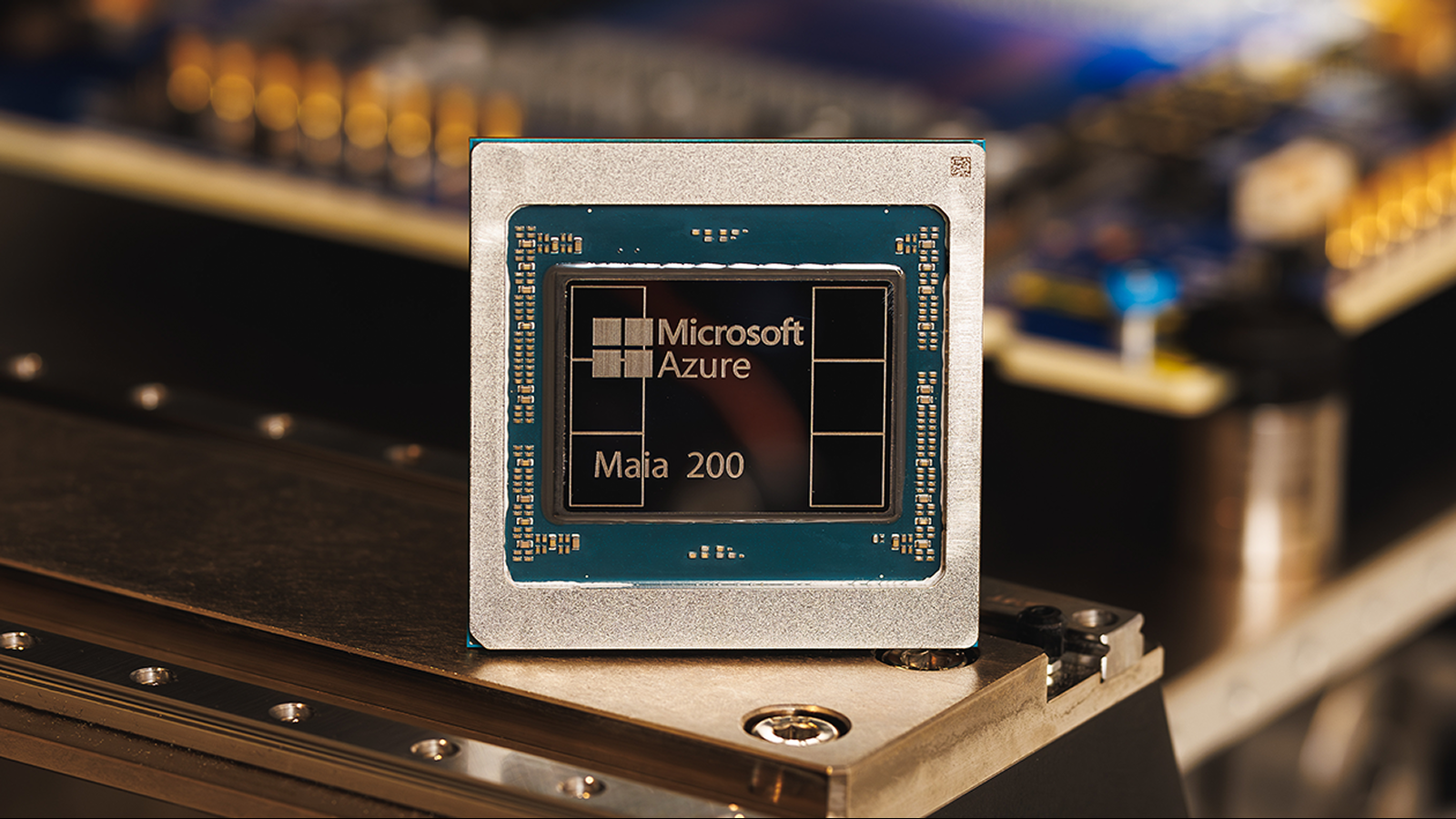

Microsoft says its newest AI chip Maia 200 is 3 times more powerful than Google's TPU and Amazon's Trainium processor

Microsoft says its newest AI chip Maia 200 is 3 times more powerful than Google's TPU and Amazon's Trainium processor

AI may accelerate scientific progress — but here's why it can't replace human scientists

AI may accelerate scientific progress — but here's why it can't replace human scientists

Indigenous TikTok star 'Bush Legend' is actually AI-generated, leading to accusations of 'digital blackface'

Indigenous TikTok star 'Bush Legend' is actually AI-generated, leading to accusations of 'digital blackface'

Even AI has trouble figuring out if text was written by AI — here's why

Even AI has trouble figuring out if text was written by AI — here's why

Do you think you can tell an AI-generated face from a real one?

Do you think you can tell an AI-generated face from a real one?

Will AI ever be more creative than humans?

Latest in News

Will AI ever be more creative than humans?

Latest in News

'Doomsday Clock' ticks 4 seconds closer to midnight

'Doomsday Clock' ticks 4 seconds closer to midnight

See February's full Snow Moon rise this weekend next to a glittering star cluster

See February's full Snow Moon rise this weekend next to a glittering star cluster

Standard model of cosmology holds up in massive 6-year study of the universe

Standard model of cosmology holds up in massive 6-year study of the universe

Creepy humanoid robot face learned to move its lips more accurately by staring at itself in the mirror, then watching YouTube

Creepy humanoid robot face learned to move its lips more accurately by staring at itself in the mirror, then watching YouTube

Days numbered for 'risky' lithium-ion batteries, scientists say, after fast-charging breakthrough in sodium-ion alternative

Days numbered for 'risky' lithium-ion batteries, scientists say, after fast-charging breakthrough in sodium-ion alternative

Shark attacks in Hawaii spike in October, and scientists think they know why

LATEST ARTICLES

Shark attacks in Hawaii spike in October, and scientists think they know why

LATEST ARTICLES 1Renpho Lynx smart ring review: Somewhat disappointing

1Renpho Lynx smart ring review: Somewhat disappointing- 2Diagnostic dilemma: Liquid-nitrogen-infused cocktail popped a man's stomach like a balloon

- 3See February's full Snow Moon rise this weekend next to a glittering star cluster

- 4'Doomsday Clock' ticks 4 seconds closer to midnight as unregulated AI and 'mirror life' threaten humanity

- 5'The dream has come true': Standard model of cosmology holds up in massive 6-year study of the universe — with one big caveat