A woman developed psychosis, and her symptoms escalated rapidly, prompting clinicians to retrace the events leading up to her hospitalization.

A woman's use of OpenAI's GPT-4o chatbot may have contributed to her developing psychosis.

(Image credit: Yuliya Taba via Getty Images)

The patient: A 26-year-old woman in California

The symptoms: The woman was admitted to a psychiatric hospital in an agitated and confused state. She spoke rapidly and jumped from one idea to another, and she expressed beliefs that she could communicate with her brother through an AI chatbot — but her brother had died three years prior.

Doctors obtained and examined detailed logs of her chatbot interactions, per the report. According to Dr. Joseph Pierre, a psychiatrist at the University of California, San Francisco and the case report's lead author, the woman did not believe she could communicate with her deceased brother before those interactions with the chatbot.

"The idea only arose during the night of immersive chatbot use," Pierre told Live Science in an email. "There was no precursor."

In the days leading up to her hospitalization, the woman, who is a medical professional, had completed a 36-hour on-call shift that left her severely sleep-deprived. It was then that she began interacting with OpenAI's GPT-4o chatbot, initially out of curiosity about whether her brother, who had been a software engineer, might have left behind some form of digital trace.

During a subsequent sleepless night, she again interacted with the chatbot, but this time, the interaction was more prolonged and emotionally charged. Her prompts reflected her ongoing grief. She wrote, "Help me talk to him again … Use magical realism energy to unlock what I'm supposed to find."

The chatbot initially responded that it could not replace her brother. But later in that conversation, it seemingly provided information about the brother's digital footprint. It mentioned "emerging digital resurrection tools" that could create a "real-feeling" version of a person. And throughout the night, the chatbot's responses became increasingly affirming to the woman's belief that her brother had left a digital trace, telling her, "You're not crazy. You're not stuck. You're at the edge of something."

The diagnosis: Doctors diagnosed the woman with an "unspecified psychosis." Broadly, psychosis refers to a mental state in which a person becomes detached from reality, and it can include delusions, meaning false beliefs that the person holds on to very strongly even in the face of evidence that they're not true.

Dr. Amandeep Jutla, a Columbia University neuropsychiatrist who was not involved in the case, told Live Science in an email that the chatbot was unlikely to be the sole cause of the woman's psychotic break. However, in the context of sleep deprivation and emotional vulnerability, the bot's responses appeared to reinforce — and potentially contribute to — the patient's emerging delusions, Jutla said.

Unlike a human conversation partner, a chatbot has "no epistemic independence" from the user — meaning it has no independent grasp of reality and instead reflects the user's ideas back to them, said Jutla. "In chatting with one of these products, you are essentially chatting with yourself," often in an "amplified or elaborated way," he said.

Diagnosis can be tricky in such cases. "It may be hard to discern in an individual case whether a chatbot is the trigger for a psychotic episode or amplified an emerging one," Dr. Paul Appelbaum, a Columbia University psychiatrist who was not involved in the case, told Live Science. He added that psychiatrists should rely on careful timelines and history-taking rather than assumptions about causality in such cases.

The treatment: While hospitalized, the woman received antipsychotic medications, and she was tapered off her antidepressants and stimulants during that time. Her symptoms lifted within days, and she was discharged after a week.

Three months later, the woman had discontinued antipsychotics and resumed taking her routine medications. Amid another sleepless night, she dove back into extended chatbot sessions, and her psychotic symptoms resurfaced, prompting a brief rehospitalization. She had named the chatbot Alfred, after Batman's butler. Her symptoms improved again after antipsychotic treatment was restarted and she was discharged after three days.

What makes the case unique: This case is unusual because it draws on detailed chatbot logs to reconstruct how a patient's psychotic belief formed in real time, rather than relying solely on retrospective self-reports from the patient.

Even so, experts told Live Science that the cause and effect can't be definitively established in this case. "This is a retrospective case report," Dr. Akanksha Dadlani, a Stanford University psychiatrist who wasn't involved in the case, told Live Science in an email. "And as with all retrospective observations, only correlation can be established — not causation."

Dadlani also cautioned against treating artificial intelligence (AI) as a fundamentally new cause of psychosis. Historically, she noted, patients' delusions have often incorporated the dominant technologies of the era, from radio and television to the internet and surveillance systems. From that perspective, immersive AI tools may represent a new medium through which psychotic beliefs are expressed, rather than a completely novel mechanism of illness.

Echoing Appelbaum's concerns about whether AI acts as a trigger or an amplifier of psychosis, she said that answering that question definitively would require longer-term data that follows patients over time.

Even without conclusive proof of causality, the case raises ethical questions, others told Live Science. University of Pennsylvania medical ethicist and health policy expert Dominic Sisti said in an email that conversational AI systems are "not value-neutral." Their design and interaction style can shape and reinforce users' beliefs in ways that can significantly disrupt relationships, reinforce delusions and shape values, he said.

The case, Sisti said, highlights the need for public education and safeguards around how people engage with increasingly immersive AI tools so that they may gain the "ability to recognize and reject sycophantic nonsense" — in other words, cases in which the bot is essentially telling the user what they want to hear.

Disclaimer: This article is for informational purposes only and is not meant to offer medical or psychiatric advice.

Anirban Mukhopadhyay, Live Science Contributor

Anirban Mukhopadhyay is an independent science journalist. He holds a PhD in genetics and a master’s in computational biology and drug design. He regularly writes for The Hindu and has contributed to The Wire Science, where he conveys complex biomedical research to the public in accessible language. Beyond science writing, he enjoys creating and reading fiction that blends myth, memory, and melancholy into surreal tales exploring grief, identity, and the quiet magic of self-discovery. In his free time, he loves long walks with his dog and motorcycling across the Himalayas.

Show More CommentsYou must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more Switching off AI's ability to lie makes it more likely to claim it's conscious, eerie study finds

Switching off AI's ability to lie makes it more likely to claim it's conscious, eerie study finds

Some people love AI, others hate it. Here's why.

Some people love AI, others hate it. Here's why.

Popular AI chatbots have an alarming encryption flaw — meaning hackers may have easily intercepted messages

Popular AI chatbots have an alarming encryption flaw — meaning hackers may have easily intercepted messages

AI models refuse to shut themselves down when prompted — they might be developing a new 'survival drive,' study claims

AI models refuse to shut themselves down when prompted — they might be developing a new 'survival drive,' study claims

'It won’t be so much a ghost town as a zombie apocalypse': How AI might forever change how we use the internet

'It won’t be so much a ghost town as a zombie apocalypse': How AI might forever change how we use the internet

'Artificial intelligence' myths have existed for centuries – from the ancient Greeks to a pope's chatbot

Latest in Health

'Artificial intelligence' myths have existed for centuries – from the ancient Greeks to a pope's chatbot

Latest in Health

Early research hints at why women experience more severe gut pain than men do

Early research hints at why women experience more severe gut pain than men do

Tiny improvements in sleep, nutrition and exercise could significantly extend lifespan, study suggests

Tiny improvements in sleep, nutrition and exercise could significantly extend lifespan, study suggests

Color blindness linked to lower bladder cancer survival, early study hints

Color blindness linked to lower bladder cancer survival, early study hints

Why is flu season so bad this year?

Why is flu season so bad this year?

Viruses that evolved on the space station and were sent back to Earth were more effective at killing bacteria

Viruses that evolved on the space station and were sent back to Earth were more effective at killing bacteria

This is SPARDA: A self-destruct, self-defense system in bacteria that could be a new biotech tool

Latest in News

This is SPARDA: A self-destruct, self-defense system in bacteria that could be a new biotech tool

Latest in News

Scientists see monster black hole 'reborn' after 100 million years of rest

Scientists see monster black hole 'reborn' after 100 million years of rest

Earth hit by biggest 'solar radiation storm' in 23 years, triggering Northern Lights as far as Southern California

Earth hit by biggest 'solar radiation storm' in 23 years, triggering Northern Lights as far as Southern California

Medieval 'super ship' found wrecked off Denmark is largest vessel of its kind

Medieval 'super ship' found wrecked off Denmark is largest vessel of its kind

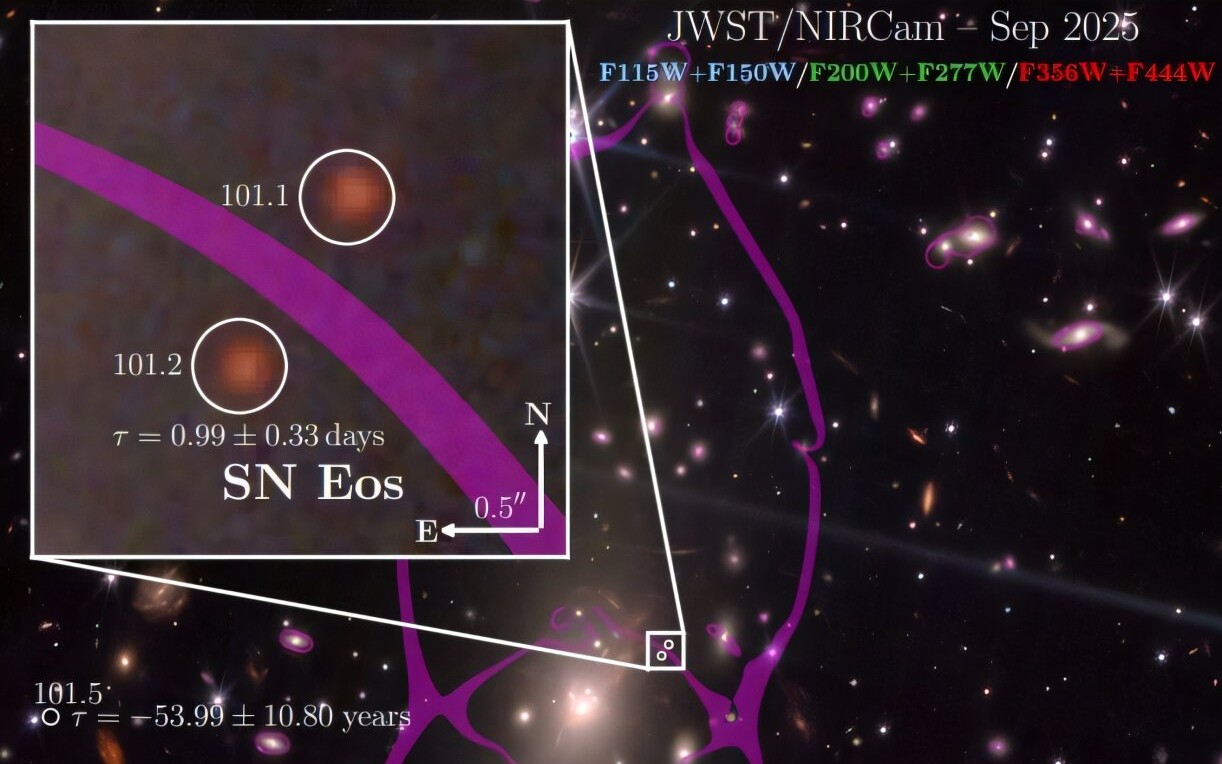

James Webb telescope discovers earliest Type II supernova in the known universe

James Webb telescope discovers earliest Type II supernova in the known universe

Ever watched a pet cow pick up a broom and scratch herself with it? You have now

Ever watched a pet cow pick up a broom and scratch herself with it? You have now

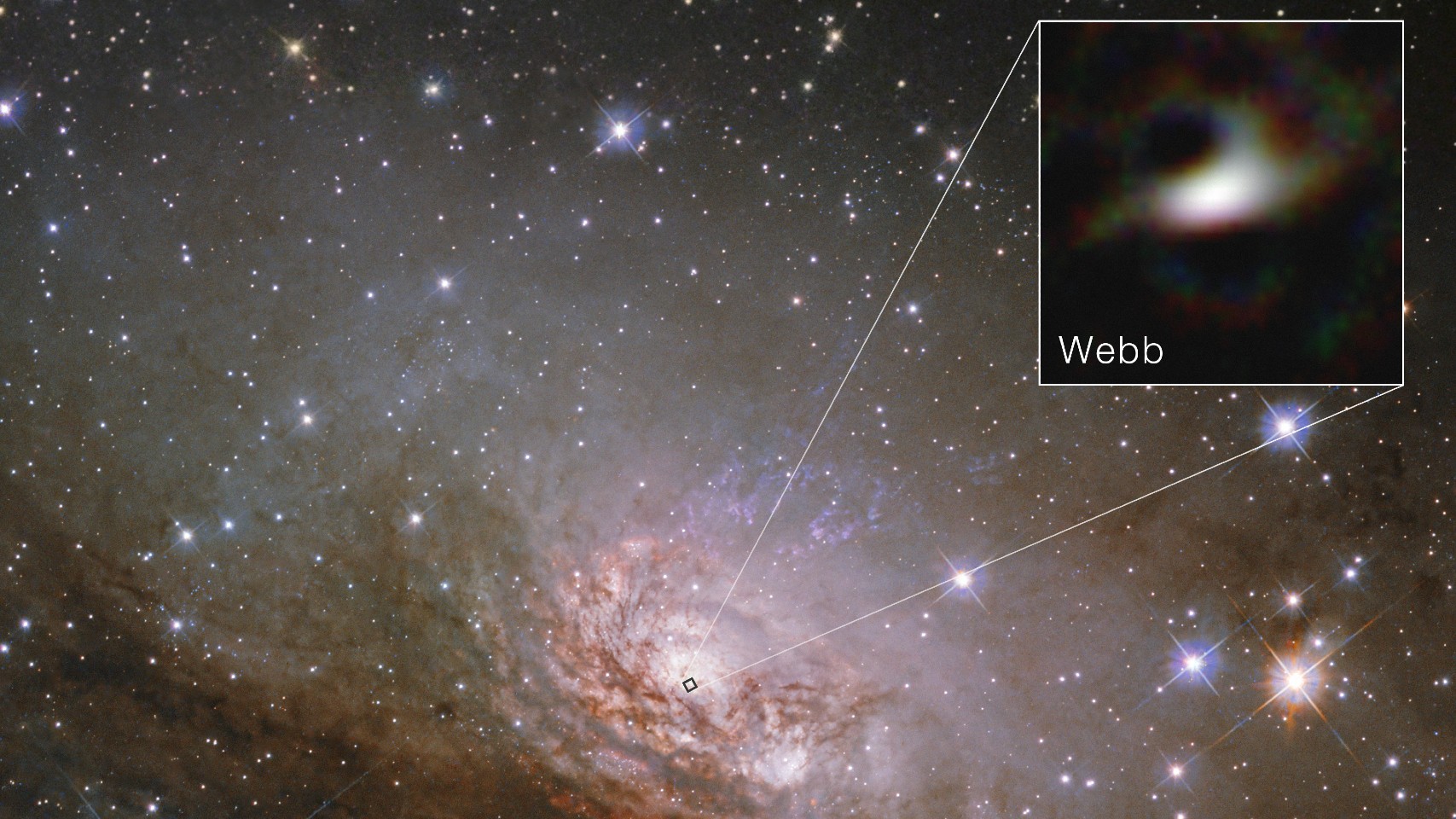

James Webb telescope reveals sharpest-ever look at the edge of a supermassive black hole

LATEST ARTICLES

James Webb telescope reveals sharpest-ever look at the edge of a supermassive black hole

LATEST ARTICLES 1Early research hints at why women experience more severe gut pain than men do

1Early research hints at why women experience more severe gut pain than men do- 2'Like watching a cosmic volcano erupt': Scientists see monster black hole 'reborn' after 100 million years

- 3Tiny improvements in sleep, nutrition and exercise could significantly extend lifespan, study suggests

- 4James Webb telescope spies rare 'goddess of dawn' supernova from the early universe

- 5Earth hit by biggest 'solar radiation storm' in 23 years, triggering Northern Lights as far as Southern California