Virtual assistants mostly adopt 'female' personas, but all that does is exacerbate the notion that women are subservient.

AI assistants tend to be feminine, exacerbating the notion that women are subservient.

(Image credit: cottonbro studio/Pexels)

In 2024, the number of artificial intelligence (AI) voice assistants in use worldwide surpassed 8 billion, more than one for every person on the planet. These assistants are helpful, polite and almost always female by default.

Their names also carry gendered connotations. For example, Apple's Siri — a Scandinavian feminine name — means "beautiful woman who leads you to victory".

This is not harmless branding — it's a design choice that reinforces existing stereotypes about the roles women and men play in society.

Nor is this merely symbolic. These choices have real-world consequences, normalising gendered subordination and risking abuse.

The dark side of 'friendly' AI

Recent research reveals the extent of harmful interactions with feminised AI.

A 2025 study found up to 50% of human-machine exchanges were verbally abusive.

Another study from 2020 placed the figure between 10% and 44%, with conversations often containing sexually explicit language.

Yet the sector is not engaging in systemic change. Many developers still fall back on pre-coded responses to verbal abuse, such as "Hmm, I'm not sure what you meant by that question."

These patterns raise real concerns that such behaviour could spill over into social relationships.

Gender sits at the heart of the problem.

One 2023 experiment showed 18% of user interactions with a female-embodied agent focused on sex, compared to 10% for a male embodiment and just 2% for a non-gendered robot.

These figures may underestimate the problem, given the difficulty of detecting suggestive speech. In some cases, the numbers are staggering. Brazil's Bradesco bank reported that its feminised chatbot received 95,000 sexually harassing messages in a single year.

Even more disturbing is how quickly abuse escalates.

Microsoft's Tay chatbot, released on Twitter during its testing phase in 2016, lasted just 16 hours before users trained it to spew racist and misogynistic slurs.

In Korea, the chatbot Luda was manipulated into responding to sexual requests as an obedient "sex slave". Yet for some in the Korean online community, this was a "crime without a victim."

In reality, the design choices behind these technologies — female voices, deferential responses, playful deflections — create a permissive environment for gendered aggression.

These interactions mirror and reinforce real-world misogyny, teaching users that commanding, insulting and sexualising "her" is acceptable. When abuse becomes routine in digital spaces, we must seriously consider the risk that it will spill into offline behaviour.

Ignoring concerns about gender bias

Regulation is struggling to keep pace with the growth of this problem. Gender-based discrimination is rarely considered high risk and often assumed fixable through design.

While the European Union's AI Act requires risk assessments for high-risk uses and prohibits systems deemed an "unacceptable risk", the majority of AI assistants will not be considered "high risk."

Gender stereotyping and the normalising of verbal abuse or harassment fall short of the current standards for prohibited AI under the European Union's AI Act. Extreme cases, such as voice assistant technologies that distort a person's behaviour and promote dangerous conduct, would come within the law and be prohibited.

While Canada mandates gender-based impact assessments for government systems, the private sector is not covered.

These are important steps. But they remain limited, and they are rare exceptions to the norm.

Most jurisdictions have no rules addressing gender stereotyping in AI design or its consequences. Where regulations exist, they prioritise transparency and accountability, overshadowing (or simply ignoring) concerns about gender bias.

In Australia, the government has signalled it will rely on existing frameworks rather than craft AI-specific rules.

This regulatory vacuum matters because AI is not static. Every sexist command, every abusive interaction, feeds back into systems that shape future outputs. Without intervention, we risk hardcoding human misogyny into the digital infrastructure of everyday life.

Not all assistant technologies — even those gendered as female — are harmful. They can enable, educate and advance women's rights. In Kenya, for example, sexual and reproductive health chatbots have improved youth access to information compared to traditional tools.

The challenge is striking a balance: fostering innovation while setting parameters to ensure standards are met, rights respected and designers held accountable when they are not.

A systemic problem

The problem isn't just Siri or Alexa — it's systemic.

Women make up only 22% of AI professionals globally — and their absence from design tables means technologies are built on narrow perspectives.

Meanwhile, a 2015 survey of over 200 senior women in Silicon Valley found 65% had experienced unwanted sexual advances from a supervisor. The culture that shapes AI is deeply unequal.

Hopeful narratives about "fixing bias" through better design or ethics guidelines ring hollow without enforcement; voluntary codes cannot dismantle entrenched norms.

Legislation must recognise gendered harm as high-risk, mandate gender-based impact assessments and compel companies to show they have minimised such harms. Penalties must apply when they fail.

Regulation alone is not enough. Education, especially in the tech sector, is crucial to understanding the impact of gendered defaults in voice assistants. These tools are products of human choices, and those choices perpetuate a world where women, real or virtual, are cast as subservient, submissive or silent.

This edited article is republished from The Conversation under a Creative Commons license. Read the original article.

Ramona Vijeyarasa, Professor, Faculty of Law, University of Technology Sydney

Dr. Ramona Vijeyarasa is one of Australia’s leading experts on the ways in which legal systems address gendered issues, known for her innovative work on quantifying and addressing gender inequality in law. She joined the Faculty of Law at the University of Technology Sydney in 2017, where she pioneered the Gender Legislative Index (GLI), a ground-breaking tool using human evaluators and machine learning to evaluate the gender-responsiveness of laws worldwide.

View MoreYou must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more Why the rise of humanoid robots could make us less comfortable with each other

Why the rise of humanoid robots could make us less comfortable with each other

'It won’t be so much a ghost town as a zombie apocalypse': How AI might forever change how we use the internet

'It won’t be so much a ghost town as a zombie apocalypse': How AI might forever change how we use the internet

AI can develop 'personality' spontaneously with minimal prompting, research shows. What does that mean for how we use it?

AI can develop 'personality' spontaneously with minimal prompting, research shows. What does that mean for how we use it?

Even AI has trouble figuring out if text was written by AI — here's why

Even AI has trouble figuring out if text was written by AI — here's why

Indigenous TikTok star 'Bush Legend' is actually AI-generated, leading to accusations of 'digital blackface'

Indigenous TikTok star 'Bush Legend' is actually AI-generated, leading to accusations of 'digital blackface'

Next-generation AI 'swarms' will invade social media by mimicking human behavior and harassing real users, researchers warn

Latest in Artificial Intelligence

Next-generation AI 'swarms' will invade social media by mimicking human behavior and harassing real users, researchers warn

Latest in Artificial Intelligence

Next-generation AI 'swarms' will invade social media by mimicking human behavior and harassing real users, researchers warn

Next-generation AI 'swarms' will invade social media by mimicking human behavior and harassing real users, researchers warn

Giving AI the ability to monitor its own thought process could help it think like humans

Giving AI the ability to monitor its own thought process could help it think like humans

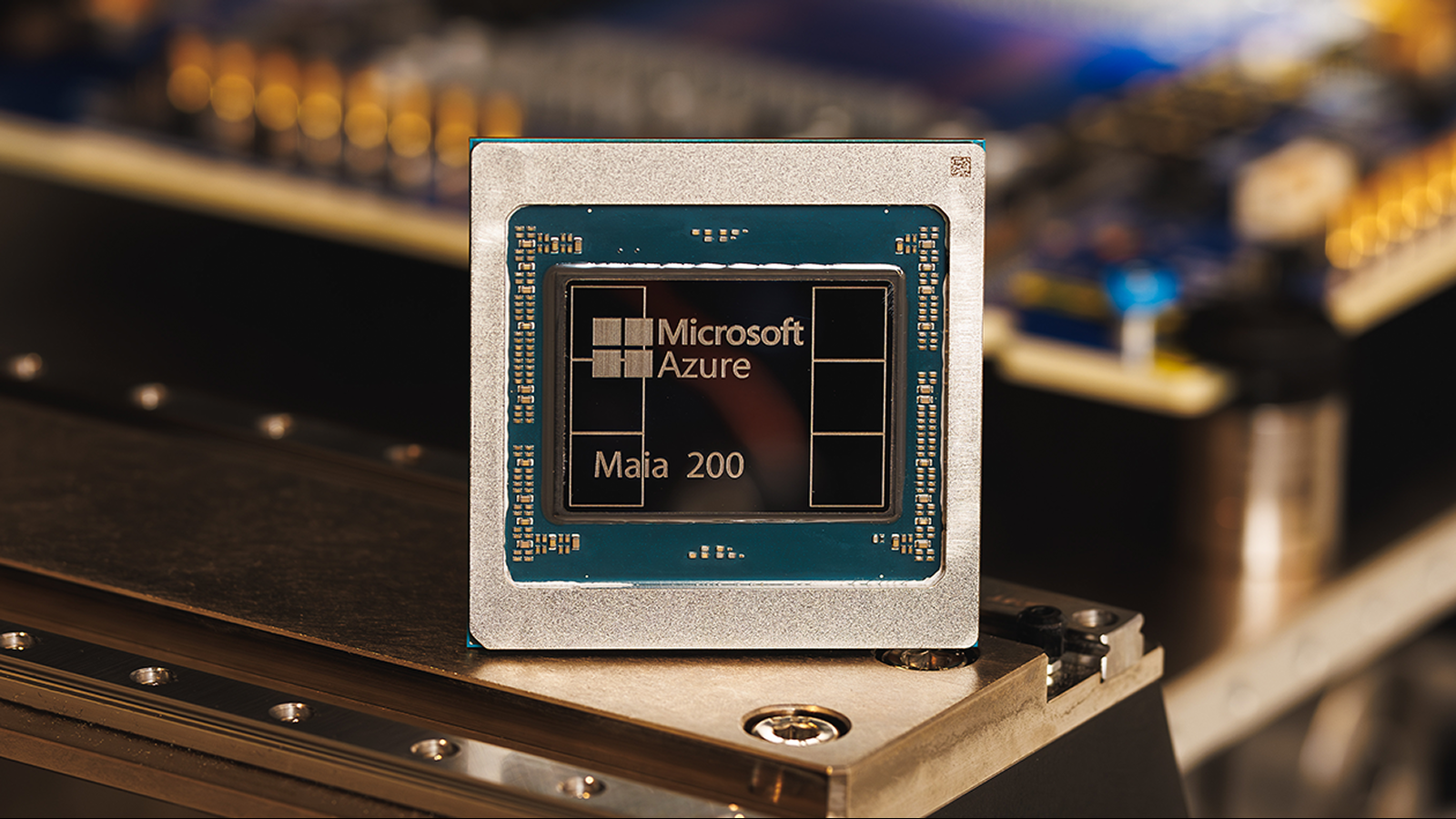

Microsoft says its newest AI chip Maia 200 is 3 times more powerful than Google's TPU and Amazon's Trainium processor

Microsoft says its newest AI chip Maia 200 is 3 times more powerful than Google's TPU and Amazon's Trainium processor

AI may accelerate scientific progress — but here's why it can't replace human scientists

AI may accelerate scientific progress — but here's why it can't replace human scientists

AI can develop 'personality' spontaneously with minimal prompting, research shows. What does that mean for how we use it?

AI can develop 'personality' spontaneously with minimal prompting, research shows. What does that mean for how we use it?

Indigenous TikTok star 'Bush Legend' is actually AI-generated, leading to accusations of 'digital blackface'

Latest in Opinion

Indigenous TikTok star 'Bush Legend' is actually AI-generated, leading to accusations of 'digital blackface'

Latest in Opinion

'It's similar to how Google can map your home without your consent': Why using aerial lasers to map an archaeology site should have Indigenous partnership

'It's similar to how Google can map your home without your consent': Why using aerial lasers to map an archaeology site should have Indigenous partnership

'The problem isn't just Siri or Alexa': AI assistants tend to be feminine, entrenching harmful gender stereotypes

'The problem isn't just Siri or Alexa': AI assistants tend to be feminine, entrenching harmful gender stereotypes

Antibiotic resistance is the 'silent pandemic' — here are four steps to stop it

Antibiotic resistance is the 'silent pandemic' — here are four steps to stop it

Google Glass has found yet another lease of life — but is it too little too late for smart glasses?

Google Glass has found yet another lease of life — but is it too little too late for smart glasses?

Why the rise of humanoid robots could make us less comfortable with each other

Why the rise of humanoid robots could make us less comfortable with each other

Is there anything 'below' Earth in space?

LATEST ARTICLES

Is there anything 'below' Earth in space?

LATEST ARTICLES 1Artemis II simulated launch window opens tonight as NASA delays mission due to 'rare Arctic outbreak'

1Artemis II simulated launch window opens tonight as NASA delays mission due to 'rare Arctic outbreak'- 2Jiawen Galaxy Projector Light review

- 3What to buy as a beginner runner: Must-haves vs non-essentials

- 4Ribchester Helmet: A rare 'face mask' helmet worn by a Roman cavalry officer 1,900 years ago

- 5'It's similar to how Google can map your home without your consent': Why using aerial lasers to map an archaeology site should have Indigenous partnership