
People who played the “Prisoner’s Dilemma” were less likely to cooperate with partners labeled male, whether human or AI, and more likely to exploit partners labeled female.


(Image credit: Feodora Chiosea/Getty Images)


People are more likely to exploit female AI partners than male ones — showing that gender-based discrimination has an impact beyond human interactions.

A recent study, published Nov. 2 in the journal iScience, examined how people varied in their willingness to cooperate when human or AI partners were given female, nonbinary or male labels, or no gender label at all.

Researchers asked participants to play the “Prisoner’s Dilemma,” a well-known game-theory scenario in which two players each choose either to cooperate with the other or to act in their own interest. If both cooperate, they get the best combined outcome.

But if one chooses to cooperate and the other does not, the player who did not cooperate scores better, offering an incentive for one to “exploit” the other. If they both choose not to cooperate, both players score low.
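To make the scoring concrete, here is a minimal Python sketch of the game's payoff structure. The point values (3 each for mutual cooperation, 5 and 0 when one player exploits the other, 1 each for mutual defection) are common textbook placeholders and an assumption; the article does not give the study's actual scoring. The helper names `score` and `best_response` are hypothetical.

```python
# A minimal Prisoner's Dilemma payoff table. The point values are textbook
# placeholders (an assumption), not the scoring used in the iScience study.
COOPERATE = "cooperate"
DEFECT = "defect"

# PAYOFFS[(my_move, their_move)] -> (my_score, their_score)
PAYOFFS = {
    (COOPERATE, COOPERATE): (3, 3),  # mutual cooperation: both do well
    (COOPERATE, DEFECT): (0, 5),     # I cooperate, my partner exploits me
    (DEFECT, COOPERATE): (5, 0),     # I exploit a cooperating partner
    (DEFECT, DEFECT): (1, 1),        # mutual defection: both score low
}


def score(my_move: str, their_move: str) -> int:
    """Return my points for one round."""
    return PAYOFFS[(my_move, their_move)][0]


def best_response(expected_partner_move: str) -> str:
    """Pick the move that maximizes my own points, given the partner's expected move."""
    return max((COOPERATE, DEFECT), key=lambda move: score(move, expected_partner_move))


if __name__ == "__main__":
    for partner in (COOPERATE, DEFECT):
        print(f"partner expected to {partner}: best selfish move is {best_response(partner)}")
```

Under these values, defecting earns a player more points whatever the partner is expected to do, yet mutual cooperation still yields the highest combined total. That tension is what makes the choice to cooperate a useful measure of trust.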

People were about 10% more likely to exploit an AI partner than a human one, the study showed. It also revealed that participants were more likely to cooperate with female, nonbinary and no-gender partners than male partners because they expected the other player to cooperate as well.

People were less likely to cooperate with male partners because they didn’t trust them to choose cooperation, the study found — especially female participants, who were more likely to cooperate with other "female" agents than male-identified agents, an effect known as "homophily."

"Observed biases in human interactions with AI agents are likely to impact their design, for example, to maximize people’s engagement and build trust in their interactions with automated systems," the researchers said in the study. "Designers of these systems need to be aware of unwelcome biases in human interactions and actively work toward mitigating them in the design of interactive AI agents."

The risks of anthropomorphizing AI agents

When participants didn’t cooperate, it was for one of two reasons. Either they expected the other player not to cooperate and didn’t want to be left with the lowest score, or they expected the other player to cooperate and chose to go solo to earn a higher score at that player’s expense. The researchers defined this second motive as exploitation.
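The two motives for not cooperating can be expressed as a simple decision rule. The Python sketch below labels each non-cooperative choice by what the participant expected their partner to do. The "exploitation" label follows the article's definition; the "self_protection" label and the function names are hypothetical choices for illustration, not terms taken from the study.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple


def classify_choice(cooperated: bool, expected_partner_cooperates: bool) -> Optional[str]:
    """Label one round's choice by the participant's motive for not cooperating.

    Returns None when the participant cooperated, since only
    non-cooperation is split into the two motives.
    """
    if cooperated:
        return None
    if expected_partner_cooperates:
        # Defecting against a partner expected to cooperate: the article's "exploitation".
        return "exploitation"
    # Defecting because the partner was expected to defect as well.
    # "self_protection" is a hypothetical label, not the study's term.
    return "self_protection"


def tally(rounds: Iterable[Tuple[bool, bool]]) -> Counter:
    """Count motive labels over (cooperated, expected_partner_cooperates) pairs."""
    counts: Counter = Counter()
    for cooperated, expected in rounds:
        label = classify_choice(cooperated, expected)
        if label is not None:
            counts[label] += 1
    return counts


if __name__ == "__main__":
    # Toy, made-up rounds purely to show the function's behavior.
    toy_rounds = [(False, True), (False, False), (True, True), (False, True)]
    print(tally(toy_rounds))
```

The key point the sketch captures is that the same visible action (not cooperating) is classified differently depending on the participant's expectation, which is why the researchers needed to know what each player expected their partner to do.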

Participants were more likely to "exploit" partners labeled female, nonbinary or no gender than partners labeled male, and the likelihood of exploitation rose further when the partner was an AI. Men were more likely than women to exploit their partners, and they cooperated more with human partners than with AI ones. Women cooperated more often than men and did not discriminate between human and AI partners.

The study did not have enough participants identifying as any gender other than female or male to draw conclusions about how other genders interact with gendered human and AI partners.

According to the study, more and more AI tools are being anthropomorphized (given human-like characteristics such as genders and names) to encourage people to trust and engage with them.

Anthropomorphizing AI without considering how gender-based discrimination affects people’s interactions could, however, reinforce existing biases, making discrimination worse.


While many of today’s AI systems are online chatbots, in the near future, people could be routinely sharing the road with self-driving cars or having AI manage their work schedules. This means we may have to cooperate with AI in the same way that we are currently expected to cooperate with other humans, making awareness of AI gender bias even more critical.

"While displaying discriminatory attitudes toward gendered AI agents may not represent a major ethical challenge in and of itself, it could foster harmful habits and exacerbate existing gender-based discrimination within our societies," the researchers added.

"By understanding the underlying patterns of bias and user perceptions, designers can work toward creating effective, trustworthy AI systems capable of meeting their users’ needs while promoting and preserving positive societal values such as fairness and justice."

Damien Pine, Live Science contributor

Damien Pine (he/him) is a freelance writer, artist, and former NASA engineer. He writes about science, physics, tech, art, and other topics with a focus on making complicated ideas accessible. He has a degree in mechanical engineering from the University of Connecticut, and he gets really excited every time he sees a cat.

You must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more Some people love AI, others hate it. Here's why.

Some people love AI, others hate it. Here's why.

The more that people use AI, the more likely they are to overestimate their own abilities

The more that people use AI, the more likely they are to overestimate their own abilities

AI voices are now indistinguishable from real human voices

AI voices are now indistinguishable from real human voices

Switching off AI's ability to lie makes it more likely to claim it's conscious, eerie study finds

Switching off AI's ability to lie makes it more likely to claim it's conscious, eerie study finds

AI could use online images as a backdoor into your computer, alarming new study suggests

AI could use online images as a backdoor into your computer, alarming new study suggests

Being mean to ChatGPT increases its accuracy — but you may end up regretting it, scientists warn

Latest in Artificial Intelligence

Being mean to ChatGPT increases its accuracy — but you may end up regretting it, scientists warn

Latest in Artificial Intelligence

Your AI-generated image of a cat riding a banana exists because of children clawing through the dirt for toxic elements. Is it really worth it?

Your AI-generated image of a cat riding a banana exists because of children clawing through the dirt for toxic elements. Is it really worth it?

Popular AI chatbots have an alarming encryption flaw — meaning hackers may have easily intercepted messages

Popular AI chatbots have an alarming encryption flaw — meaning hackers may have easily intercepted messages

Experts divided over claim that Chinese hackers launched world-first AI-powered cyber attack — but that's not what they're really worried about

Experts divided over claim that Chinese hackers launched world-first AI-powered cyber attack — but that's not what they're really worried about

Switching off AI's ability to lie makes it more likely to claim it's conscious, eerie study finds

Switching off AI's ability to lie makes it more likely to claim it's conscious, eerie study finds

The more that people use AI, the more likely they are to overestimate their own abilities

The more that people use AI, the more likely they are to overestimate their own abilities

Some people love AI, others hate it. Here's why.

Latest in News

Some people love AI, others hate it. Here's why.

Latest in News

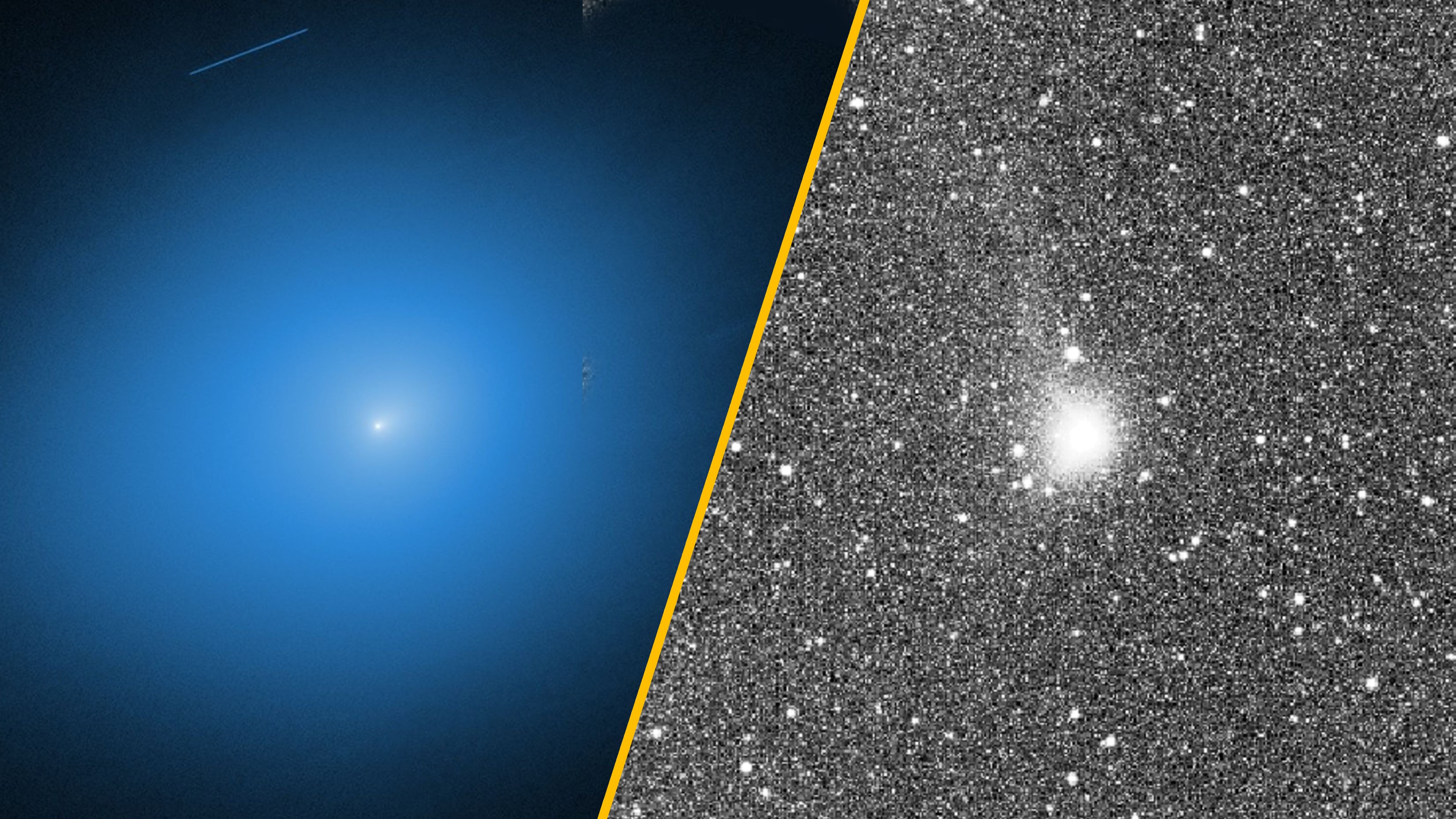

New 3I/ATLAS images show the comet getting active ahead of close encounter with Earth

New 3I/ATLAS images show the comet getting active ahead of close encounter with Earth

Ethereal structure in the sky rivals 'Pillars of Creation' — Space photo of the week

Ethereal structure in the sky rivals 'Pillars of Creation' — Space photo of the week

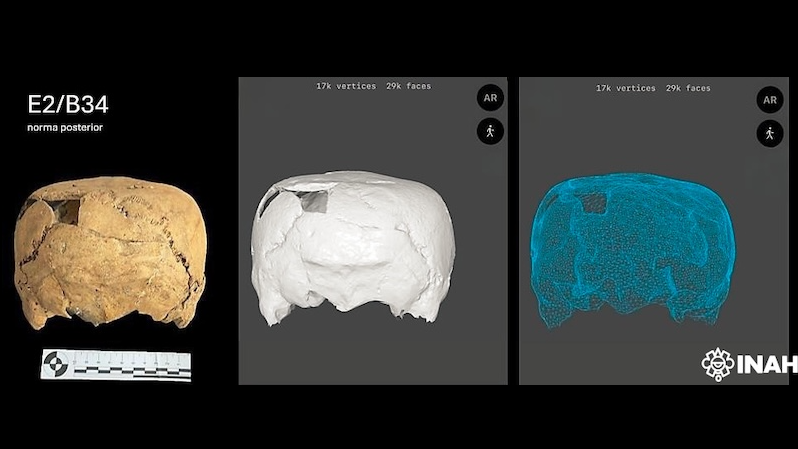

Unusual, 1,400-year-old cube-shaped human skull unearthed in Mexico

Unusual, 1,400-year-old cube-shaped human skull unearthed in Mexico

Strangely bleached rocks on Mars hint that the Red Planet was once a tropical oasis

Strangely bleached rocks on Mars hint that the Red Planet was once a tropical oasis

Lost Indigenous settlements described by Jamestown colonist John Smith finally found

Lost Indigenous settlements described by Jamestown colonist John Smith finally found

2,400-year-old 'sacrificial complex' uncovered in Russia is the richest site of its kind ever discovered

LATEST ARTICLES

2,400-year-old 'sacrificial complex' uncovered in Russia is the richest site of its kind ever discovered

LATEST ARTICLES 1Lost Indigenous settlements described by Jamestown colonist John Smith finally found

1Lost Indigenous settlements described by Jamestown colonist John Smith finally found- 2Strangely bleached rocks on Mars hint that the Red Planet was once a tropical oasis

- 32,400-year-old 'sacrificial complex' uncovered in Russia is the richest site of its kind ever discovered

- 4Ethereal structure in the sky rivals 'Pillars of Creation' — Space photo of the week

- 5What was the loudest sound ever recorded?