
Designed to assist rather than replace doctors, a new autonomous tool scans clinical notes to highlight patients who may need urgent follow-up for cognitive decline and potential dementia.


A new AI system is designed to spot potential red flags for cognitive decline in doctors' notes about their patients.

(Image credit: Getty Images)


The earliest signs of cognitive decline often appear not in a formal diagnosis, but in the small clues buried in health care providers' notes.

A new study published Jan. 7 in the journal npj Digital Medicine suggests artificial intelligence (AI) can help identify these early signals — such as issues with memory and thinking or changes in behavior — by scanning doctors' notes for patterns of concern. These might include recurring mentions of cognitive changes or confusion from the patient, or worries raised by family members attending the appointment with their loved one.


"The goal is not to replace clinical judgment but to function as a screening aid," study co-author Dr. Lidia Moura, an associate professor of neurology at Massachusetts General Hospital, told Live Science. By highlighting such patients, she said, the system could help clinicians decide which people to follow up with, especially in settings where specialists are in short supply.

Whether that kind of screening actually helps patients depends on how it is used, said Julia Adler-Milstein, a health informatician at the University of California, San Francisco who was not involved in the study. "If the flags are accurate, go to the right person on the care team and are actionable, meaning they lead to a clear next step, then yes, they can be easily integrated into the clinical workflow," she told Live Science in an email.

A team of AI agents, not just one

To build their new AI system, the researchers used what they call an "agentic" approach. This term refers to a coordinated set of AI programs — five, in this case — that each have a specific role and review one another's work. Together, these collaborating agents iteratively refined how the system interpreted clinical notes without human input.

The researchers built the system on Meta's Llama 3.1 and gave it three years of doctors' notes to study, including clinic visits, progress notes and discharge summaries. These came from a hospital registry and had already been reviewed by clinicians who noted whether cognitive concerns were present in a given patient's chart.

The team first showed the AI a balanced set of patient notes, half with documented cognitive concerns and half without, and let it learn from its mistakes as it tried to match how clinicians had labeled those records. By the end of that process, the system agreed with the clinicians about 91% of the time.
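To make the idea of agents reviewing one another's work more concrete, here is a toy Python sketch of such a refinement loop. It is not the study's code: a simple keyword matcher stands in for the Llama 3.1 model, and the five roles (a classifier, two reviewers, an editor and a checker), the `CANDIDATE_TERMS` vocabulary and the acceptance rule are all hypothetical choices made for illustration. The loop revises its flagging criteria against clinician labels with no human input, accepting a revision only if agreement does not drop.

```python
from dataclasses import dataclass

# Hypothetical vocabulary the reviewer agents may propose from (illustrative only).
CANDIDATE_TERMS = {"memory", "confusion", "forgetful", "disoriented"}


@dataclass
class Note:
    text: str
    clinician_label: bool  # True if chart reviewers recorded a cognitive concern


def classifier_agent(note: Note, criteria: frozenset[str]) -> bool:
    """Stand-in for an LLM call: flag the note if it mentions any current criterion."""
    text = note.text.lower()
    return any(term in text for term in criteria)


def agreement(notes: list[Note], criteria: frozenset[str]) -> float:
    """Fraction of notes where the classifier matches the clinician label."""
    matches = sum(classifier_agent(n, criteria) == n.clinician_label for n in notes)
    return matches / len(notes)


def missed_case_reviewer(notes: list[Note], criteria: frozenset[str]) -> set[str]:
    """Propose vocabulary terms found in clinician-positive notes the classifier missed."""
    proposals: set[str] = set()
    for n in notes:
        if n.clinician_label and not classifier_agent(n, criteria):
            proposals |= {t for t in CANDIDATE_TERMS if t in n.text.lower()}
    return proposals


def false_flag_reviewer(notes: list[Note], criteria: frozenset[str]) -> set[str]:
    """Propose dropping criteria that fired on notes clinicians marked negative."""
    proposals: set[str] = set()
    for n in notes:
        if not n.clinician_label and classifier_agent(n, criteria):
            proposals |= {t for t in criteria if t in n.text.lower()}
    return proposals


def editor_agent(criteria: frozenset[str], additions: set[str], removals: set[str]) -> frozenset[str]:
    """Merge both reviewers' proposals into a revised set of criteria."""
    return frozenset((criteria | additions) - removals)


def checker_agent(notes: list[Note], old: frozenset[str], new: frozenset[str]) -> frozenset[str]:
    """Accept the revision only if agreement with clinician labels does not drop."""
    return new if agreement(notes, new) >= agreement(notes, old) else old


def refine(notes: list[Note], criteria: frozenset[str], rounds: int = 3) -> frozenset[str]:
    """Run several review-and-revise rounds with no human input."""
    for _ in range(rounds):
        proposed = editor_agent(
            criteria,
            missed_case_reviewer(notes, criteria),
            false_flag_reviewer(notes, criteria),
        )
        criteria = checker_agent(notes, criteria, proposed)
    return criteria


# Invented example notes, not study data.
EXAMPLE_NOTES = [
    Note("Patient reports memory lapses; family worried.", True),
    Note("Daughter notes increasing confusion at night.", True),
    Note("Knee pain after a fall last week.", False),
    Note("Routine diabetes check; stable.", False),
]

refined = refine(EXAMPLE_NOTES, frozenset({"memory", "fall"}))
print(sorted(refined), agreement(EXAMPLE_NOTES, refined))  # → ['confusion', 'memory'] 1.0
```

In this toy run, the first round drops the misleading term "fall" and adds "confusion", after which the criteria match every clinician label. A real agentic system would more plausibly revise natural-language instructions given to a language model than a keyword list.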

The finalized system was then tested on a separate subset of data that it hadn't seen before, but that was pulled from the same three-year dataset. This test set was meant to reflect real-world care, so only about one-third of the records were labeled by clinicians as showing cognitive concern.

In that test, the system's sensitivity fell to about 62%, meaning it missed nearly four in ten cases clinicians had marked as positive for signs of cognitive decline.
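Sensitivity counts only the records clinicians marked as positive, so it can be low even when overall agreement looks respectable. The short Python sketch below makes that arithmetic explicit. Its counts are invented for illustration (a hypothetical 300-record test set in which one-third, or 100 records, are clinician-positive) and are not the study's data.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # clinician-positive, flagged by the system
    fn: int  # clinician-positive, missed by the system
    tn: int  # clinician-negative, not flagged
    fp: int  # clinician-negative, flagged anyway

    def sensitivity(self) -> float:
        """Share of clinician-positive records the system also flagged."""
        return self.tp / (self.tp + self.fn)

    def miss_rate(self) -> float:
        """Share of clinician-positive records the system missed (1 - sensitivity)."""
        return self.fn / (self.tp + self.fn)

    def agreement(self) -> float:
        """Share of all records on which the system and clinicians agree."""
        total = self.tp + self.fn + self.tn + self.fp
        return (self.tp + self.tn) / total


# Hypothetical test set: 300 records, 100 of them labeled positive by clinicians.
counts = ConfusionCounts(tp=62, fn=38, tn=190, fp=10)
print(f"sensitivity={counts.sensitivity():.2f}")  # → sensitivity=0.62
print(f"miss rate={counts.miss_rate():.2f}")  # → miss rate=0.38
print(f"agreement={counts.agreement():.2f}")  # → agreement=0.84
```

With these made-up numbers, the system misses 38 of 100 positive records ("nearly four in ten"), yet still agrees with clinicians on 84% of all records, because the 200 negative records make up most of the total.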


At first glance, the drop in accuracy looked like failure — until the researchers reexamined the medical records that the AI and human reviewers had classified differently.

Clinical experts reviewed these instances by rereading the medical records themselves, and did so without knowing whether the classification had come from clinicians or the AI. In 44% of cases, these reviewers ultimately sided with the system's assessment rather than the original chart review conducted by a doctor.
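The blinded re-review can be sketched as a simple protocol: for each record where the AI and the original chart review disagree, the reviewer sees the two competing labels in random order without knowing which came from where, and a tally records how often the choice matches the AI. In the Python sketch below, the records, the `expert_reading` stand-in for a reviewer's own judgment and the A/B presentation format are all hypothetical; the study's actual procedure may have differed.

```python
import random
from dataclasses import dataclass


@dataclass
class Disagreement:
    record_id: str
    clinician_label: bool  # original chart-review label
    ai_label: bool  # the system's label (differs from the clinician's)


def blind_options(case: Disagreement, rng: random.Random) -> tuple[dict[str, bool], dict[str, str]]:
    """Present the two labels as anonymous options "A" and "B" in random order.

    Returns the options shown to the reviewer and a private key that maps
    each option back to its source, which the reviewer never sees.
    """
    sources = [("clinician", case.clinician_label), ("ai", case.ai_label)]
    rng.shuffle(sources)
    options = {"A": sources[0][1], "B": sources[1][1]}
    key = {"A": sources[0][0], "B": sources[1][0]}
    return options, key


def share_siding_with_ai(cases, reviewer, seed: int = 0) -> float:
    """Fraction of disagreements where the blinded reviewer picked the AI's label."""
    rng = random.Random(seed)
    sided_with_ai = 0
    for case in cases:
        options, key = blind_options(case, rng)
        choice = reviewer(case.record_id, options)  # reviewer sees labels, not sources
        if key[choice] == "ai":
            sided_with_ai += 1
    return sided_with_ai / len(cases)


# Hypothetical expert readings: True means the reviewer judges a concern present.
expert_reading = {"r1": False, "r2": True, "r3": True, "r4": True}


def expert_reviewer(record_id: str, options: dict[str, bool]) -> str:
    """Pick whichever anonymous option matches the expert's own reading."""
    return next(opt for opt, label in options.items() if label == expert_reading[record_id])


cases = [
    Disagreement("r1", clinician_label=True, ai_label=False),
    Disagreement("r2", clinician_label=True, ai_label=False),
    Disagreement("r3", clinician_label=False, ai_label=True),
    Disagreement("r4", clinician_label=True, ai_label=False),
]
print(share_siding_with_ai(cases, expert_reviewer))  # → 0.5
```

Because the shuffle hides each label's source, the result depends only on the reviewer's reading of each record; with this invented data, the reviewer sides with the AI in two of four disputes.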

"That was one of the more surprising findings," said study co-author Hossein Estiri, an associate professor of neurology at Massachusetts General Hospital.

In many of those cases, he said, the AI applied clinical definitions more conservatively than doctors did, declining to flag concerns when notes didn't directly describe memory problems, confusion or other changes in how the patient was thinking — even if a diagnosis of cognitive decline was listed elsewhere in the record. In essence, the AI was trained to prioritize mentions of potential cognitive concerns, which doctors might not always flag as important in the moment.

The results highlight the limits of manual chart review by doctors, Moura said. "When the signals are obvious, everyone sees them," she said. "When they're subtle, that's where humans and machines can diverge."

Karin Verspoor, a researcher in AI and health technologies at RMIT University who was not involved in the study, said the system was evaluated on a carefully curated, clinician-reviewed set of doctors' notes. But because the data came from a single hospital network, she cautioned that its accuracy may not translate to settings where documentation practices differ.


The system is only as good as the notes it reads, she said, and that constraint can be addressed only by optimizing the system across diverse clinical settings.

Estiri explained that the system is intended to run quietly in the background of routine doctors' visits, surfacing potential concerns alongside an explanation of how it reached them. It is not yet being used in clinical practice, however.

"The idea is not that doctors are sitting there using AI tools," he said, "but that the system provides insight — what we're seeing, and why — as part of the clinical record itself."

Article Sources

Tian, J., Fard, P., Cagan, C. et al. An autonomous agentic workflow for clinical detection of cognitive concerns using large language models. npj Digit. Med. 9, 51 (2026). https://doi.org/10.1038/s41746-025-02324-4

Anirban Mukhopadhyay, Live Science Contributor

Anirban Mukhopadhyay is an independent science journalist. He holds a PhD in genetics and a master's in computational biology and drug design. He regularly writes for The Hindu and has contributed to The Wire Science, where he conveys complex biomedical research to the public in accessible language. Beyond science writing, he enjoys creating and reading fiction that blends myth, memory, and melancholy into surreal tales exploring grief, identity, and the quiet magic of self-discovery. In his free time, he loves long walks with his dog and motorcycling across the Himalayas.

Show More CommentsYou must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more Giving AI the ability to monitor its own thought process could help it think like humans

Giving AI the ability to monitor its own thought process could help it think like humans

A woman experienced delusions of communicating with her dead brother after late-night chatbot sessions

A woman experienced delusions of communicating with her dead brother after late-night chatbot sessions

Switching off AI's ability to lie makes it more likely to claim it's conscious, eerie study finds

Switching off AI's ability to lie makes it more likely to claim it's conscious, eerie study finds

The more that people use AI, the more likely they are to overestimate their own abilities

The more that people use AI, the more likely they are to overestimate their own abilities

Scientists are developing a 'self-driving' device that helps patients recover from heart attacks

Scientists are developing a 'self-driving' device that helps patients recover from heart attacks

AI may accelerate scientific progress — but here's why it can't replace human scientists

Latest in Ageing

AI may accelerate scientific progress — but here's why it can't replace human scientists

Latest in Ageing

Gray hair may have evolved as a protection against cancer, study hints

Gray hair may have evolved as a protection against cancer, study hints

Aging and inflammation may not go hand in hand, study suggests

Aging and inflammation may not go hand in hand, study suggests

'Aging clocks' can predict your risk of disease and early death. Here's what to know.

'Aging clocks' can predict your risk of disease and early death. Here's what to know.

Aging: What happens to the body as it gets older?

Aging: What happens to the body as it gets older?

Brain scans could reveal your true biological age

Brain scans could reveal your true biological age

'I was floored by the data': Psilocybin shows anti-aging properties in early study

Latest in News

'I was floored by the data': Psilocybin shows anti-aging properties in early study

Latest in News

New triple-drug treatment stops pancreatic cancer in its tracks, a mouse study finds

New triple-drug treatment stops pancreatic cancer in its tracks, a mouse study finds

'Part of the evolutionary fabric of our societies': Same-sex sexual behavior in primates may be a survival strategy, study finds

'Part of the evolutionary fabric of our societies': Same-sex sexual behavior in primates may be a survival strategy, study finds

More than 43,000 years ago, Neanderthals spent centuries collecting animal skulls in a cave; but archaeologists aren't sure why

More than 43,000 years ago, Neanderthals spent centuries collecting animal skulls in a cave; but archaeologists aren't sure why

Halley wasn't the first to figure out the famous comet. An 11th-century monk did it first, new research suggests.

Halley wasn't the first to figure out the famous comet. An 11th-century monk did it first, new research suggests.

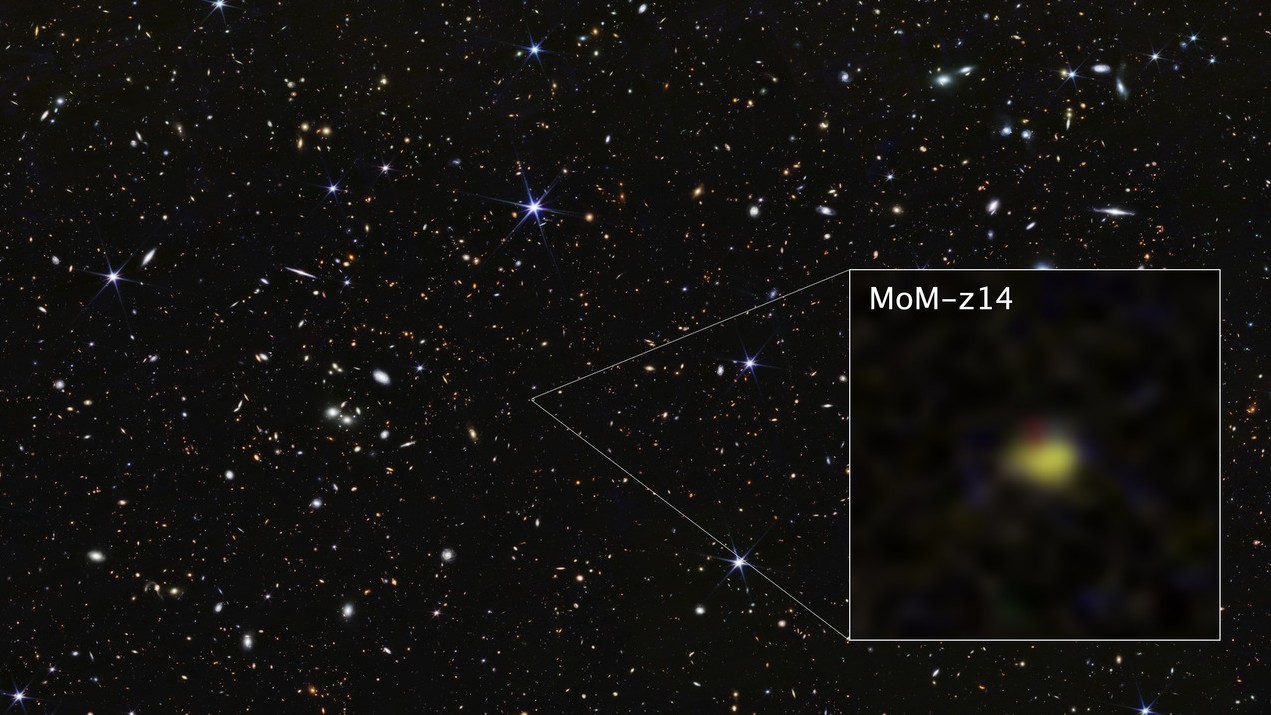

James Webb telescope breaks own record, discovering farthest known galaxy in the universe

James Webb telescope breaks own record, discovering farthest known galaxy in the universe

South Carolina's measles outbreak nears 790 cases — making it the biggest in decades

LATEST ARTICLES

South Carolina's measles outbreak nears 790 cases — making it the biggest in decades

LATEST ARTICLES 1Thousands of dams in the US are old, damaged and unable to cope with extreme weather. How bad is it?

1Thousands of dams in the US are old, damaged and unable to cope with extreme weather. How bad is it?- 2'Part of the evolutionary fabric of our societies': Same-sex sexual behavior in primates may be a survival strategy, study finds

- 3More than 43,000 years ago, Neanderthals spent centuries collecting animal skulls in a cave; but archaeologists aren't sure why

- 4Watch awkward Chinese humanoid robot lay it all down on the dance floor

- 5The Snow Moon will 'swallow' one of the brightest stars in the sky this weekend: Where and when to look